Re: APOD: Webb's First Deep Field (2022 Jul 13)

Posted: Wed Jul 13, 2022 3:51 pm

APOD and General Astronomy Discussion Forum

https://asterisk.apod.com/

As with essentially all optical systems, the image produced by the telescope is round. The images as we see them are rectangular because the sensor is rectangular (like film in most cases), clipping out a section of the full image. The shape of the mirror or its segments doesn't matter.

De58te wrote: ↑Wed Jul 13, 2022 3:33 pm Nice. What surprised me most is that the James Webb's images from its hexagonal mirrors still produces rectangular images with perfect 90 degree corners. Unlike my binoculars that has round lenses and it produces a round image. The explanation was for the old round camera lenses producing square images was because that is how the photo negative film was made. And of course science text books were rectangular and demanded rectangular photos. But nowadays when paper books are on the way out and most images are processed by computer there is no reason to still cut the round or hexagonal images into squares.

Aren't the round images simply automatically cropped by the film or sensor into the middle rectangular portion? And CCD sensors are also rectangular as far as I know. Are you asking why we don't have round sensors that precisely match the round image created by the lens? (I assume Webb still ultimately uses round lenses to focus the light from the array of hexagonal mirrors.)

De58te wrote: ↑Wed Jul 13, 2022 3:33 pm Nice. What surprised me most is that the James Webb's images from its hexagonal mirrors still produces rectangular images with perfect 90 degree corners. Unlike my binoculars that has round lenses and it produces a round image. The explanation was for the old round camera lenses producing square images was because that is how the photo negative film was made. And of course science text books were rectangular and demanded rectangular photos. But nowadays when paper books are on the way out and most images are processed by computer there is no reason to still cut the round or hexagonal images into squares.

I take it they mean the same redshift, hence roughly the same distance.

johnnydeep wrote: ↑Wed Jul 13, 2022 2:28 pm Ok, there is WAY too much stuff to talk about in this FIRST image from Webb. Hubble is now just a pile of garbage in comparison

A technical question: I don't understand why this linked-to pic of the spectra of two very similar looking lensed images proves they are one and the same galaxy:

Why couldn't the two similar spectra be from two separate but still very similar galaxies at the same distance?

There is a legend with RGB color codes for near-infrared (NIR) and for mid-infrared (MIR).

isoparix wrote: ↑Wed Jul 13, 2022 3:35 pm These images are, I suppose, false-colour representations of infrared data. How much has the spectrum been shifted upwards - two octaves? ten?

Which, in turn, seems to contradict the rather fussy replies I got earlier this year when I asked (http://asterisk.apod.com/viewtopic.php ... 30#p320630) whether Webb output could be usefully depicted in the visible spectrum.

isoparix wrote: ↑Wed Jul 13, 2022 3:35 pm These images are, I suppose, false-colour representations of infrared data.

Hmm. I presume you mean that because back when the universe was a mere 380,000 years old (limited by the time of "last scattering"), it was much smaller. But even then, it would only see the galaxies on the edges of the limiting sphere, which even at a young 380,000 years old, would still be much larger than any single galaxy. Presumably. So, just how big was the universe at 380,000 years young?

Fred the Cat wrote: ↑Wed Jul 13, 2022 6:21 pm If JWST looks far enough back, would it begin to see a set of the same first galaxies with the CMB beyond - where ever it is pointing?

Maybe that’s the obvious “point” I hadn’t imagined to be a JWST mission.

Still not convinced. Can't two different galaxies at the same distance show pretty much the same spectra (i.e., "chemistry")?

VictorBorun wrote: ↑Wed Jul 13, 2022 4:11 pm I take it they mean the same redshift, hence roughly the same distance.

johnnydeep wrote: ↑Wed Jul 13, 2022 2:28 pm Ok, there is WAY too much stuff to talk about in this FIRST image from Webb. Hubble is now just a pile of garbage in comparison

A technical question: I don't understand why this linked-to pic of the spectra of two very similar looking lensed images proves they are one and the same galaxy:

Why couldn't the two similar spectra be from two separate but still very similar galaxies at the same distance?

And the same chemistry also counts, yes.

And both seem to lie in the same smooth arc going around the midground massive cluster in the first place

...it usually helps, if you switch your browser. Edge should work ...johnnydeep wrote: ↑Wed Jul 13, 2022 2:32 pmI'm only seeing the comparison slider tool showing for the Carina nebula image. Are the others there too, but just not working for me perhaps?FLPhotoCatcher wrote: ↑Wed Jul 13, 2022 7:26 amHi all, long time, no see.AVAO wrote: ↑Wed Jul 13, 2022 5:14 am The level of detail in the WEBB images is absolutely stunning. What surprises me is the fact that the strongly redshifted galaxies from the early universe - that are now visible compared to HUBBLE - many of these are already highly structured.

...

jac berne (flickr)

The comparison tool here is great: https://johnedchristensen.github.io/WebbCompare/

I notice that the really red object left of, and a bit lower from the brightest star is completely not visible in the Hubble image. Why is it SO red?

I don't think that's what you asked. It looks like you were asking whether the results could be represented as something that would look approximately like what we'd see in visible light without redshift.

Jim Leff wrote: ↑Wed Jul 13, 2022 6:18 pm Which, in turn, seems to contradict the rather fussy replies I got earlier this year when I asked ( http://asterisk.apod.com/viewtopic.php ... 30#p320630) whether Webb output could be usefully depicted in visible spectrum.

isoparix wrote: ↑Wed Jul 13, 2022 3:35 pm These images are, I suppose, false-colour representations of infrared data.

The answer, apparently, should have been “Yes, absolutely, no problem”

Thanks. IE and Edge both work. For some reason, my chosen browser Vivaldi, based on Chromium, doesn't. How annoying.AVAO wrote: ↑Wed Jul 13, 2022 7:25 pm...it usually helps, if you switch your browser. Edge should work ...johnnydeep wrote: ↑Wed Jul 13, 2022 2:32 pmI'm only seeing the comparison slider tool showing for the Carina nebula image. Are the others there too, but just not working for me perhaps?FLPhotoCatcher wrote: ↑Wed Jul 13, 2022 7:26 am

Hi all, long time, no see.

The comparison tool here is great: https://johnedchristensen.github.io/WebbCompare/

I notice that the really red object left of, and a bit lower from the brightest star is completely not visible in the Hubble image. Why is it SO red?

Who knows? I expect the universe has as many twists and turns as a coiled extension cord. Getting it straight becomes a matter of uncoiling its story and that’s "knot" my strong point.

johnnydeep wrote: ↑Wed Jul 13, 2022 7:05 pm Hmm. I presume you mean that because back when the universe was a mere 380,000 years old (limited by the time of "last scattering"), it was much smaller. But even then, it would only see the galaxies on the edges of the limiting sphere, which even at a young 380,000 years old, would still be much larger than any single galaxy. Presumably. So, just how big was the universe at 380,000 years young?

Fred the Cat wrote: ↑Wed Jul 13, 2022 6:21 pm If JWST looks far enough back, would it begin to see a set of the same first galaxies with the CMB beyond - where ever it is pointing?

Maybe that’s the obvious “point” I hadn’t imagined to be a JWST mission.

The (largely consensus) cosmological model tells us the radius of the Universe has increased by a factor of roughly 1,100 since then (the CMB sits at a redshift of about 1090). So it was still a very, very large universe compared to the size of a galaxy.

johnnydeep wrote: ↑Wed Jul 13, 2022 7:05 pm Hmm. I presume you mean that because back when the universe was a mere 380,000 years old (limited by the time of "last scattering"), it was much smaller. But even then, it would only see the galaxies on the edges of the limiting sphere, which even at a young 380,000 years old, would still be much larger than any single galaxy. Presumably. So, just how big was the universe at 380,000 years young?

Fred the Cat wrote: ↑Wed Jul 13, 2022 6:21 pm If JWST looks far enough back, would it begin to see a set of the same first galaxies with the CMB beyond - where ever it is pointing?

Maybe that’s the obvious “point” I hadn’t imagined to be a JWST mission. :|
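For anyone who wants to put rough numbers on "how big was the universe at 380,000 years", here is a minimal back-of-the-envelope sketch. It assumes the commonly quoted CMB redshift of about 1090 and a present-day radius of about 46.5 billion light-years for the observable universe; neither figure comes from this thread, and the Milky Way diameter is a round illustrative value. It only describes the region that is observable today, not the whole universe.

```python
# Back-of-the-envelope scale of the (now) observable universe at last scattering.
# All three constants are assumed round figures, not values from this thread.
Z_CMB = 1090                    # assumed redshift of the CMB
RADIUS_TODAY_LY = 46.5e9        # assumed present radius of the observable universe, light-years
MILKY_WAY_DIAMETER_LY = 1.0e5   # assumed rough diameter of a large galaxy, light-years


def expansion_factor(z: float) -> float:
    """Factor by which distances have stretched since light at redshift z was emitted."""
    return 1.0 + z


factor = expansion_factor(Z_CMB)
radius_then_ly = RADIUS_TODAY_LY / factor
galaxies_across = radius_then_ly / MILKY_WAY_DIAMETER_LY

print(f"expansion factor since last scattering: about {factor:.0f}")
print(f"radius of that region back then: about {radius_then_ly / 1e6:.0f} million light-years")
print(f"that radius in galaxy diameters: about {galaxies_across:.0f}")
```

Under these assumptions the region was already tens of millions of light-years in radius, i.e. hundreds of galaxy diameters, which is the sense in which it was still very large compared to a single galaxy.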

Chris Peterson wrote: ↑Wed Jul 13, 2022 8:01 pmI don't think that's what you asked. It looks like you were asking whether the results could be represented as something that would look approximately like what we'd see in visible light without redshift.Jim Leff wrote: ↑Wed Jul 13, 2022 6:18 pmWhich, in turn, seems to contradict the rather fussy replies I got earlier this year when I asked ( http://asterisk.apod.com/viewtopic.php ... 30#p320630) whether Webb output could be usefully depicted in visible spectrum.isoparix wrote: ↑Wed Jul 13, 2022 3:35 pm These images are, I suppose, false-colour representations of infrared data.

The answer, apparently, should have been “Yes, absolutely, no problem”

I'm not correcting you. I'm pointing out what I thought you asked. And on review, it still seems that way. So I guess I don't know what you were asking. But the replies were all reasonable.Jim Leff wrote: ↑Thu Jul 14, 2022 12:38 amChris Peterson wrote: ↑Wed Jul 13, 2022 8:01 pmI don't think that's what you asked. It looks like you were asking whether the results could be represented as something that would look approximately like what we'd see in visible light without redshift.Jim Leff wrote: ↑Wed Jul 13, 2022 6:18 pm

Which, in turn, seems to contradict the rather fussy replies I got earlier this year when I asked ( http://asterisk.apod.com/viewtopic.php ... 30#p320630) whether Webb output could be usefully depicted in visible spectrum.

The answer, apparently, should have been “Yes, absolutely, no problem”

Chris, your expertise knows no bounds. You are correcting me re: my own intention with my own question.

I was aware at the time that you'd failed to grok what I was asking, and that you were resistant to my clarification (no harm, no foul, we all appreciate your answers). And now I salute your staunch steadfastness in maintaining that my question was precisely what you originally decided it was. Backbone is good.

But I'd meekly offer the proposal that I may (or may not!) be the higher authority on my own intentions. I do realize this creates a zero sum dilemma.

The answer is simple, I think. The mirrors focus light into one or more of the collectors, and they are rectangular. Here’s a rectangular pic of one of them…

Rob

De58te wrote: ↑Wed Jul 13, 2022 3:33 pm Nice. What surprised me most is that the James Webb's images from its hexagonal mirrors still produces rectangular images with perfect 90 degree corners. Unlike my binoculars that has round lenses and it produces a round image. The explanation was for the old round camera lenses producing square images was because that is how the photo negative film was made. And of course science text books were rectangular and demanded rectangular photos. But nowadays when paper books are on the way out and most images are processed by computer there is no reason to still cut the round or hexagonal images into squares.

Why didn't they use a sensor that better matched the actual view, or at least use a bigger one, with no reduction in resolving power? That seems like a great way to get significantly more "free" data.

rstevenson wrote: ↑Thu Jul 14, 2022 1:26 am The answer is simple, I think. The mirrors focus light into one or more of the collectors, and they are rectangular. Here’s a rectangular pic of one of them… E86C69B2-BFED-42FA-83A9-F0D08ADEDC10.jpeg

De58te wrote: ↑Wed Jul 13, 2022 3:33 pm Nice. What surprised me most is that the James Webb's images from its hexagonal mirrors still produces rectangular images with perfect 90 degree corners. Unlike my binoculars that has round lenses and it produces a round image. The explanation was for the old round camera lenses producing square images was because that is how the photo negative film was made. And of course science text books were rectangular and demanded rectangular photos. But nowadays when paper books are on the way out and most images are processed by computer there is no reason to still cut the round or hexagonal images into squares.

Rob

What makes you think the sensors don't cover all of the actual view? That they aren't matched in both pixel size and area to the optics?

FLPhotoCatcher wrote: ↑Thu Jul 14, 2022 4:51 am Why didn't they use a sensor that better matched the actual view, or at least use a bigger one, with no reduction in resolving power? That seems like a great way to get significantly more "free" data.

rstevenson wrote: ↑Thu Jul 14, 2022 1:26 am The answer is simple, I think. The mirrors focus light into one or more of the collectors, and they are rectangular. Here’s a rectangular pic of one of them… E86C69B2-BFED-42FA-83A9-F0D08ADEDC10.jpeg

De58te wrote: ↑Wed Jul 13, 2022 3:33 pm Nice. What surprised me most is that the James Webb's images from its hexagonal mirrors still produces rectangular images with perfect 90 degree corners. Unlike my binoculars that has round lenses and it produces a round image. The explanation was for the old round camera lenses producing square images was because that is how the photo negative film was made. And of course science text books were rectangular and demanded rectangular photos. But nowadays when paper books are on the way out and most images are processed by computer there is no reason to still cut the round or hexagonal images into squares.

Rob

Oh wow. You'll have to make do with my very shaky infrared "expertise". (Yeah, using the word "expertise" here is a joke.)

isoparix wrote: ↑Wed Jul 13, 2022 3:35 pm These images are, I suppose, false-colour representations of infrared data. How much has the spectrum been shifted upwards - two octaves? ten?

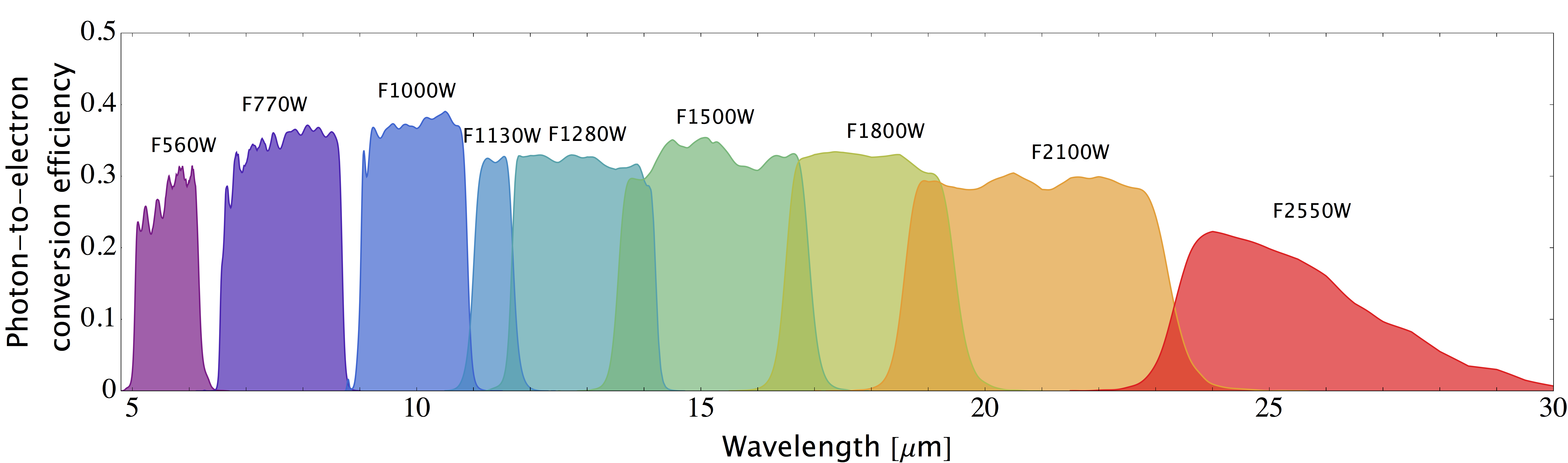

The JWST NIRCam is the camera detecting the shortest infrared wavelengths. There is another camera too, which I think is called MIRI (Mid-Infrared Instrument) (but you'll have to google, because I'm too lazy), which detects longer, mid-infrared wavelengths.

JWST User Documentation wrote:

JWST NIRCam offers 29 bandpass filters in the short wavelength (0.6–2.3 μm) and long wavelength (2.4–5.0 μm) channels.
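To put a rough number on the "how many octaves" question, here is a small sketch that treats an octave as a factor of two in wavelength. The NIRCam limits are the ones quoted above; the visible band edges (0.4 and 0.7 μm) are an approximate assumption for illustration. Real Webb composites assign individual filters to colours rather than applying one uniform shift, so this is only an order-of-magnitude picture.

```python
import math

# Approximate visible band edges in micrometres (assumed for illustration).
VISIBLE_UM = (0.4, 0.7)
# NIRCam coverage as quoted from the JWST User Documentation.
NIRCAM_UM = (0.6, 5.0)


def octaves_between(short_um: float, long_um: float) -> float:
    """Number of octaves (factors of two in wavelength) from short_um up to long_um."""
    return math.log2(long_um / short_um)


# Width of each band, in octaves.
visible_width = octaves_between(*VISIBLE_UM)
nircam_width = octaves_between(*NIRCAM_UM)

# Shift needed to bring NIRCam's longest wavelength down to the red edge of the visible band.
shift_long_end = octaves_between(VISIBLE_UM[1], NIRCAM_UM[1])

print(f"visible band width: {visible_width:.2f} octaves")
print(f"NIRCam band width:  {nircam_width:.2f} octaves")
print(f"5.0 um to 0.7 um:   {shift_long_end:.2f} octaves")
```

On these assumptions NIRCam spans about three octaves while the eye covers less than one, so the mapping is a compression as well as a shift of a few octaves at the long end.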

As Rob said, "The answer is simple, I think. The mirrors focus light into one or more of the collectors, and they [the collectors] are rectangular."

Chris Peterson wrote: ↑Thu Jul 14, 2022 5:00 am What makes you think the sensors don't cover all of the actual view? That they aren't matched in both pixel size and area to the optics?

FLPhotoCatcher wrote: ↑Thu Jul 14, 2022 4:51 am Why didn't they use a sensor that better matched the actual view, or at least use a bigger one, with no reduction in resolving power? That seems like a great way to get significantly more "free" data.

rstevenson wrote: ↑Thu Jul 14, 2022 1:26 am

The answer is simple, I think. The mirrors focus light into one or more of the collectors, and they are rectangular. Here’s a rectangular pic of one of them… E86C69B2-BFED-42FA-83A9-F0D08ADEDC10.jpeg

Rob

The corrected field at the focal plane is round. So yes, if you used a round detector, you could get more coverage than using a square one. But those pixels would hardly be "free". In fact, there's really no way to make a round detector because of the way that rows and columns are read. And cutting a round section from a semiconductor crystal? Really difficult! So what you'd need to do is make an oversized square detector, and it would have its corners outside the well-corrected area of the focal plane. You could either use those pixels at reduced resolution, or ignore them. (Most ordinary cameras have worse resolution in the corners because of this.) And, of course, that larger sensor with more pixels would be MUCH more expensive. So they really are using the most effective, practical method.

FLPhotoCatcher wrote: ↑Thu Jul 14, 2022 7:12 am As Rob said, "The answer is simple, I think. The mirrors focus light into one or more of the collectors, and they [the collectors] are rectangular."

Chris Peterson wrote: ↑Thu Jul 14, 2022 5:00 am What makes you think the sensors don't cover all of the actual view? That they aren't matched in both pixel size and area to the optics?

FLPhotoCatcher wrote: ↑Thu Jul 14, 2022 4:51 am

Why didn't they use a sensor that better matched the actual view, or at least use a bigger one, with no reduction in resolving power? That seems like a great way to get significantly more "free" data.

I think the incoming light would be round-ish, and the collector is rectangular. I have no proof one way or the other though.
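The round-field versus square-detector trade-off can be sketched with a little geometry. This assumes an idealised, perfectly circular corrected field, as described in the reply above; the 512-pixel grid size is a hypothetical value chosen only to keep the check quick, and none of this reflects Webb's actual detector layout.

```python
import math


def inscribed_square_coverage() -> float:
    """Fraction of a circular field covered by the largest square that fits inside it."""
    # A circle of radius r has area pi*r^2. The inscribed square has diagonal 2r,
    # so its area is 2*r^2. The ratio 2/pi does not depend on r.
    return 2.0 / math.pi


def circumscribed_square_waste() -> float:
    """Fraction of an oversized square's area lying outside the circular field."""
    # A square of side 2r has area 4*r^2, of which the circle fills pi*r^2.
    return 1.0 - math.pi / 4.0


def pixels_inside_field(n: int) -> int:
    """Count pixels of an n x n grid whose centres fall inside the inscribed circle."""
    r = n / 2.0
    inside = 0
    for row in range(n):
        for col in range(n):
            dx = col + 0.5 - r
            dy = row + 0.5 - r
            if dx * dx + dy * dy <= r * r:
                inside += 1
    return inside


n = 512  # hypothetical detector size in pixels per side
inside = pixels_inside_field(n)

print(f"square inside the circle covers {inscribed_square_coverage():.1%} of the field")
print(f"square around the circle wastes {circumscribed_square_waste():.1%} of its area")
print(f"{n}x{n} grid: {inside / (n * n):.1%} of pixels sit inside the field")
```

So a square tucked inside the round field gives up roughly a third of the corrected area, while an oversized square that covers all of it leaves roughly a fifth of its pixels in the poorly corrected corners.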